The Complete Guide to Animal Pose Estimation in 2023: Tools, Models, Tutorial

Discover animal pose estimation with Supervisely. Learn how to annotate animals using keypoints and state-of-the-art neural networks.

Introduction

Welcome to the world of animal pose estimation! Over the past few years, computer vision research on animal pose estimation has expanded significantly in various directions. While early research focused on pose analysis of humans and pets, today we can perform pose estimation even on wild animals and insects.

However, compared to human pose estimation, which is highly accurate and handles tricky situations well, animal pose estimation is still in its early days. There's a lot more to explore and improve in this field.

In this post, you'll find out:

- What pose estimation is and how it is used in different fields

- Why animal pose estimation is trickier than human pose estimation

- Easy steps to label and export your data with keypoints for animals in Supervisely

Stick with us to see how computers are helping us understand how animals move and behave in the wild, on farms, and beyond.

Video tutorial

In this video, we demonstrate how to get the most out of the pose estimation tools in Supervisely. You will learn how to:

- Create a keypoints class and build a skeleton to form a pose structure.

- Use the ViTPose model for animal pose estimation.

- Easily annotate large datasets with just one tool, covering object detection and pose estimation in a single pass.

What is Pose Estimation?

Pose estimation is a computer vision task that pinpoints the position and orientation of people, animals, or objects. It's all about identifying specific points on their bodies, like joints or body parts for humans, and features like paws, tails, or hooves for animals. These models can even handle multiple subjects at once. Pose estimation can also provide more detail than object detection: instead of a single bounding box, it gives precise coordinates for keypoints on the subject.

Example of human pose estimation
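To make the difference from object detection concrete, here is a minimal Python sketch (not Supervisely code) that stores a pose as a mapping from hypothetical keypoint names to pixel coordinates. The enclosing bounding box can always be derived from the keypoints, but the keypoints cannot be recovered from the box alone.

```python
from typing import Dict, Tuple

Point = Tuple[float, float]


def bbox_from_keypoints(pose: Dict[str, Point]) -> Tuple[float, float, float, float]:
    """Return (x_min, y_min, x_max, y_max) enclosing all keypoints of one pose."""
    if not pose:
        raise ValueError("pose has no keypoints")
    xs = [x for x, _ in pose.values()]
    ys = [y for _, y in pose.values()]
    return min(xs), min(ys), max(xs), max(ys)


# Hypothetical pose of one animal: keypoint name -> (x, y) in pixels.
cow_pose = {
    "nose": (40.0, 120.0),
    "left_ear": (60.0, 95.0),
    "tail_tip": (310.0, 140.0),
    "front_left_hoof": (90.0, 260.0),
}
print(bbox_from_keypoints(cow_pose))  # (40.0, 95.0, 310.0, 260.0)
```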

Pose estimation is useful in many domains, for example:

- Agriculture: Monitoring livestock body pose and behavior for animal health.

- Retail: Analyzing customer interactions with products for store optimization.

- Gaming: Capturing lifelike character movements in video games.

- Sports: Enhancing athlete training and performance analysis.

- Healthcare: Monitoring patient mobility and aiding rehabilitation.

Animal Pose Estimation

At first glance, human and animal pose estimation may seem nearly identical. Are there any significant differences?

Animal pose estimation, like human pose estimation, involves determining the position of various body parts in an image or video. However, animal pose estimation is more challenging because of the diversity of species, the unique anatomical features of each animal, and the poses animals adopt that humans cannot replicate. For example, pose estimation for a giraffe, a goat, and a beaver would require three completely different skeletons.

Accurately identifying the pose of an animal is important in areas such as animal tracking, behavioral analysis, species identification, and migration studies. The task of animal pose estimation is complicated by limited access to data and the complexity of animal behavior.

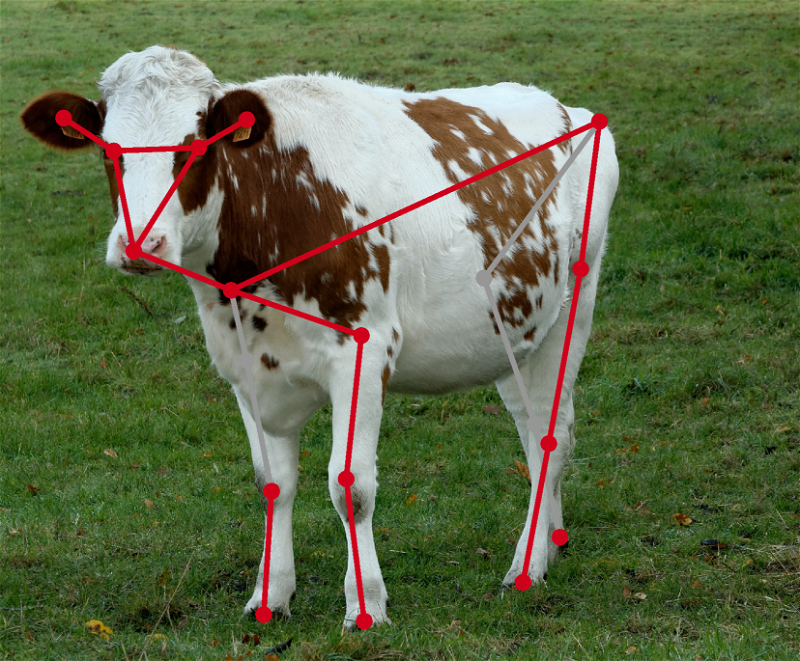

Example of animal pose estimation

Labeling Animals With Keypoints in Supervisely: Everything You Need to Know

Detecting an animal's pose using keypoints means identifying and labeling specific anatomical points on the animal's body to understand its current posture and position. These can include ear tips, tail tips, joints, and more.

Below, we walk through the tools available in Supervisely for both manual and automatic labeling of animal poses.

Definition of keypoint skeleton template

Choose animal: Decide on an animal species for which you want to label the pose.

Create a keypoint shape class: You can either do this in advance in the Classes tab or from the labeling window.

Identify keypoints: Study the animal's anatomy and identify the keypoints that best describe the animal's pose. For example, for a cow, these might be points on the nose, eyes, ears, knees, etc.

Arrange keypoints: To help you place the points, you can upload an image of your selected animal. Start by adding keypoints at the appropriate places on the animal using the Add node button, then connect them using the Add edge button. Don't forget to name the points and save the class.
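The template steps above boil down to a small graph: named nodes with reference positions, plus edges between pairs of nodes. The Python sketch below models that idea; the class `SkeletonTemplate` and its methods are hypothetical illustrations, not the Supervisely SDK, and the cow keypoint names are examples only.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class SkeletonTemplate:
    """Hypothetical keypoint class template: named nodes plus edges between them."""
    name: str
    # node name -> (x, y) position in the reference image
    nodes: Dict[str, Tuple[float, float]] = field(default_factory=dict)
    edges: List[Tuple[str, str]] = field(default_factory=list)

    def add_node(self, node: str, x: float, y: float) -> None:
        if node in self.nodes:
            raise ValueError(f"duplicate node name: {node}")
        self.nodes[node] = (x, y)

    def add_edge(self, a: str, b: str) -> None:
        # An edge may only connect two different nodes that already exist.
        for n in (a, b):
            if n not in self.nodes:
                raise KeyError(f"unknown node: {n}")
        if a == b:
            raise ValueError("an edge must connect two different nodes")
        self.edges.append((a, b))


cow = SkeletonTemplate("cow")
reference_points = {
    "nose": (10, 50),
    "left_eye": (25, 35),
    "withers": (120, 30),
    "tail_base": (260, 40),
    "front_left_knee": (110, 150),
}
for node, (x, y) in reference_points.items():
    cow.add_node(node, x, y)
cow.add_edge("nose", "left_eye")
cow.add_edge("left_eye", "withers")
cow.add_edge("withers", "tail_base")
cow.add_edge("withers", "front_left_knee")
print(len(cow.nodes), len(cow.edges))  # 5 4
```

Validating edges at creation time keeps the template consistent, which matters because every annotation made with it inherits the same structure.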

Manual annotation tools

Start labeling the images: Place a pre-defined skeleton on the object. To do this, click once on the edges of the object.

Correct keypoints: Adjust the existing points with the Drag a point to move, Disable/Enable point, and Remove point tools so that they match the animal's pose.

Repeat these steps image by image until you have labeled all the data you need. Be as precise as possible so that the keypoints faithfully describe the animal's position.
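The sketch below illustrates the placement and correction steps, assuming the tool stretches the template skeleton to a rectangle marked on the object (an assumption about the behavior, not a description of Supervisely's implementation). `fit_template_to_box`, `move_point`, `toggle_point`, and `remove_point` are hypothetical helpers mirroring the Drag, Disable/Enable, and Remove tools.

```python
from typing import Dict, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def fit_template_to_box(template: Dict[str, Point], box: Box) -> Dict[str, dict]:
    """Linearly map template node positions into `box`; every node starts visible."""
    xs = [x for x, _ in template.values()]
    ys = [y for _, y in template.values()]
    tx0, ty0 = min(xs), min(ys)
    tw = (max(xs) - tx0) or 1.0  # guard against a zero-width template
    th = (max(ys) - ty0) or 1.0  # guard against a zero-height template
    x0, y0, x1, y1 = box
    sx, sy = (x1 - x0) / tw, (y1 - y0) / th
    return {
        name: {"xy": (x0 + (x - tx0) * sx, y0 + (y - ty0) * sy), "visible": True}
        for name, (x, y) in template.items()
    }


def move_point(pose: Dict[str, dict], name: str, x: float, y: float) -> None:
    pose[name]["xy"] = (x, y)  # like "Drag a point to move"


def toggle_point(pose: Dict[str, dict], name: str) -> None:
    pose[name]["visible"] = not pose[name]["visible"]  # like "Disable/Enable point"


def remove_point(pose: Dict[str, dict], name: str) -> None:
    del pose[name]  # like "Remove point"


template = {"nose": (0, 0), "tail_tip": (100, 50), "hoof": (50, 100)}
pose = fit_template_to_box(template, (200, 100, 400, 300))
print(pose["tail_tip"]["xy"])  # (400.0, 200.0)
move_point(pose, "hoof", 310, 295)
toggle_point(pose, "tail_tip")  # e.g. the tail is hidden behind another animal
remove_point(pose, "nose")
```

Disabling a point keeps it in the skeleton (marked not visible), whereas removing it deletes it from this annotation entirely.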

⚠️ Remember that manually labeling an animal's pose using keypoints can be time-consuming, especially for complex poses and species. However, it also gives you more control over the results and allows you to more accurately describe the anatomical features of the animals in the image.

The gray line is connected to the concealed (non-visible) sections of the cow
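One simple way to express the rendering rule in the caption: an edge is drawn gray when at least one of its endpoints is disabled (not visible). The Python sketch below assumes that rule; it illustrates the idea and is not Supervisely's rendering code.

```python
from typing import Dict, List, Tuple


def edge_styles(visible: Dict[str, bool], edges: List[Tuple[str, str]]) -> Dict[Tuple[str, str], str]:
    """Assumed rule: an edge is gray if either endpoint is hidden, otherwise solid."""
    return {
        (a, b): "solid" if visible[a] and visible[b] else "gray"
        for a, b in edges
    }


visible = {"withers": True, "front_left_knee": True, "front_right_knee": False}
edges = [("withers", "front_left_knee"), ("withers", "front_right_knee")]
print(edge_styles(visible, edges))
```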

AI-assisted annotation with ViTPose

Explore our blog post ViTPose — How to use the best Pose Estimation Model on Humans & Animals to get in-depth knowledge about this state-of-the-art pose estimation model.

Remember that to run GPU-dependent apps, you need to connect a computer with a GPU to your Supervisely account. You can connect multiple computers for additional computing resources. Watch a 1.5-minute how-to video to learn more.

Here is how to run ViTPose in Supervisely:

Run the Serve ViTPose app. Go to the Ecosystem page and find the Serve ViTPose app. You can also run it from the Neural Networks page under Images → Pose Estimation (Keypoints) → Serve.

Select the pre-trained ViTPose+ model for animal pose estimation and the type of animal you're interested in, press the Serve button, and wait for the model to deploy.

⚠️ If you select a model for animal pose estimation, you will also see a list of supported animal species and basic information about the pitfalls of animal pose estimation.

Apply ViTPose to images in the labeling tool. Run the NN Image Labeling app, connect it to ViTPose, create a bounding box whose class name is the animal's type, and click Apply the model to ROI.

For animal pose estimation, create a bounding box whose class name appears in the list of supported animal species; a keypoints skeleton class named {yourclassname}keypoints will then be created. Otherwise, a keypoints skeleton class named animalkeypoints will be created.
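The naming rule above can be written as a tiny function. This Python sketch reproduces the class names exactly as stated in the text; the species set is a hypothetical subset (check the app for the real list), and the exact, case-sensitive match is an assumption.

```python
from typing import AbstractSet

# Hypothetical subset of supported species; the app shows the real list.
SUPPORTED_SPECIES = frozenset({"cow", "dog", "horse", "sheep"})


def keypoints_class_name(box_class_name: str, supported: AbstractSet[str] = SUPPORTED_SPECIES) -> str:
    """Mirror the naming rule described in the text for the created keypoints class."""
    if box_class_name in supported:  # assumed exact, case-sensitive match
        return f"{box_class_name}keypoints"
    return "animalkeypoints"


print(keypoints_class_name("cow"))       # cowkeypoints
print(keypoints_class_name("capybara"))  # animalkeypoints
```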

Correct keypoints. If only part of the target object is visible in the image, you can increase the point threshold in the app settings to get rid of unnecessary points. Then adjust the remaining points with the Drag a point to move, Disable/Enable point, and Remove point tools so that they match the animal's pose.
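A point threshold like this typically acts on per-keypoint confidence scores: points the model is unsure about (often the ones outside the visible part of the animal) fall below the cutoff and are dropped. A minimal Python sketch, assuming predictions arrive as `name -> (x, y, score)` and that a score equal to the threshold is kept (the app's exact comparison is not confirmed here):

```python
from typing import Dict, Tuple


def filter_by_threshold(
    pred: Dict[str, Tuple[float, float, float]], threshold: float
) -> Dict[str, Tuple[float, float]]:
    """Keep only keypoints whose confidence score is at least `threshold`."""
    return {name: (x, y) for name, (x, y, score) in pred.items() if score >= threshold}


# Hypothetical predictions for a partially visible animal.
pred = {
    "nose": (52.0, 80.0, 0.91),
    "left_eye": (60.0, 70.0, 0.88),
    "tail_tip": (300.0, 90.0, 0.12),
    "rear_left_paw": (280.0, 210.0, 0.34),
}
print(sorted(filter_by_threshold(pred, 0.3)))  # ['left_eye', 'nose', 'rear_left_paw']
print(sorted(filter_by_threshold(pred, 0.5)))  # ['left_eye', 'nose']
```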

Autolabeling pipeline: detection using YOLOv8 + pose estimation using ViTPose

- Follow the previous steps to run the ViTPose app.

- Run the YOLOv8 app for detection. Go to the Ecosystem page and find the Serve YOLOv8 app. You can also run it from the Neural Networks page under Images → Object Detection → Serve. Choose one of the pre-trained models and the Object detection task type, press the Serve button, and wait for the model to deploy.

- Run the Apply Detection and Pose Estimation Models to Images Project app. Go to the Ecosystem page and find it, or run it from the Neural Networks page under Images → Pose Estimation (Keypoints) → Inference interfaces. This app labels images with a detection model and then adds poses to the resulting bounding boxes with a pose estimation model.

- Configure the app settings (for more details, refer to the video tutorial).
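The pipeline above is a top-down scheme: the detector proposes boxes, and the pose model runs once per box. The Python sketch below shows that control flow with stand-in model functions (`fake_detector` and `fake_pose_model` are hypothetical stubs, not the YOLOv8 or ViTPose APIs). It also assumes the pose model returns coordinates relative to the box's top-left corner, which must be shifted back into image coordinates.

```python
from typing import Callable, Dict, List, Tuple

Box = Tuple[float, float, float, float]       # (x_min, y_min, x_max, y_max), image pixels
Detection = Tuple[str, Box, float]            # (class name, box, detection score)
Pose = Dict[str, Tuple[float, float, float]]  # keypoint name -> (x, y, score)


def autolabel(
    image: object,
    detect: Callable[[object], List[Detection]],
    estimate_pose: Callable[[object, Box], Pose],
    det_threshold: float = 0.5,
) -> List[dict]:
    """Top-down sketch: detect boxes, then estimate one pose per kept box."""
    labels = []
    for cls, box, score in detect(image):
        if score < det_threshold:
            continue  # skip weak detections before spending time on pose estimation
        x_min, y_min = box[0], box[1]
        crop_pose = estimate_pose(image, box)  # assumed crop-relative coordinates
        # Shift each keypoint from crop coordinates back into image coordinates.
        pose = {name: (x + x_min, y + y_min, s) for name, (x, y, s) in crop_pose.items()}
        labels.append({"class": cls, "box": box, "score": score, "pose": pose})
    return labels


def fake_detector(image: object) -> List[Detection]:
    # Hypothetical stand-in for a detector such as YOLOv8.
    return [("dog", (10, 20, 110, 120), 0.93), ("cat", (200, 40, 260, 90), 0.31)]


def fake_pose_model(image: object, box: Box) -> Pose:
    # Hypothetical stand-in for a pose model such as ViTPose.
    return {"nose": (5.0, 8.0, 0.9)}


labels = autolabel(None, fake_detector, fake_pose_model)
print(len(labels), labels[0]["pose"])  # 1 {'nose': (15.0, 28.0, 0.9)}
```

Running detection first lets the pose model work on one animal at a time, which is why small animals in a crowded frame can still get individual poses.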

Let's look at what our autolabeling pipeline can achieve. The model produces highly accurate results, automatically and precisely labeling even the poses of small and blurred animals 😍

It's time to harvest — how to export your data

Export images and/or annotations in Supervisely format

Download images, projects, or datasets in Supervisely JSON format. It is possible to download both images and annotations or only annotations.

Export only labeled items

The app exports only the labeled items from a project in Supervisely format and prepares a downloadable tar archive. Unlabeled items are skipped.
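The skip-unlabeled logic can be sketched with Python's standard `tarfile` module. The archive layout (`ann/<image name>.json`) and the rule "labeled means at least one object" are assumptions for illustration; this is not the app's code or a specification of the Supervisely format.

```python
import io
import json
import os
import tarfile
import tempfile
from typing import Iterable


def export_labeled(items: Iterable[dict], archive_path: str) -> int:
    """Write annotations of labeled items into a tar archive and return how many were written.

    Assumption: an item counts as labeled if its annotation has at least one object.
    """
    written = 0
    with tarfile.open(archive_path, "w") as tar:
        for item in items:
            if not item["annotation"].get("objects"):
                continue  # unlabeled items are skipped
            data = json.dumps(item["annotation"]).encode("utf-8")
            info = tarfile.TarInfo(name=f"ann/{item['name']}.json")  # hypothetical layout
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
            written += 1
    return written


items = [
    {"name": "cow_001.jpg", "annotation": {"objects": [{"class": "cowkeypoints"}]}},
    {"name": "cow_002.jpg", "annotation": {"objects": []}},
]
path = os.path.join(tempfile.mkdtemp(), "labeled_only.tar")
print(export_labeled(items, path))  # 1
```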

Conclusion

The field of animal pose estimation has seen significant advancements in recent years, expanding its applications from humans and pets to encompass wild animals and insects. However, it's important to note that when compared to human pose estimation, which boasts high accuracy and versatility in complex scenarios, animal pose estimation is still in its early stages of development.

The uniqueness of each animal species, their varied anatomical features, and the diversity of poses they can assume make this field particularly challenging. Nevertheless, animal pose estimation holds immense potential in areas such as agriculture, where it can help monitor livestock health and much more.

In this blog post, we've explored the intricacies of labeling animal poses using keypoints in Supervisely, offering both manual and AI-assisted annotation methods. The use of cutting-edge models like ViTPose further enhances the accuracy and efficiency of animal pose estimation.

With Supervisely's autolabeling pipeline, we've witnessed impressive results, showcasing the precision of automatically labeling even small and blurred animal poses. Finally, we've discussed the process of exporting data in Supervisely format, emphasizing the practicality and versatility of the tools available for this purpose. In essence, animal pose estimation is a burgeoning field with tremendous potential, and as technology continues to advance, we can expect even more exciting developments in the future.

Supervisely for Computer Vision

Supervisely is an online and on-premise platform that helps researchers and companies build computer vision solutions. We cover the entire development pipeline: from data labeling of images, videos, and 3D to model training.

The big difference from other products is that Supervisely is built like an OS with countless Supervisely Apps: interactive web tools that run in your browser yet are powered by Python. This makes it possible to integrate all those awesome open-source machine learning tools and neural networks, enhance them with a user interface, and let everyone run them with a single click.

You can order a demo or try it yourself for free on our Community Edition — no credit card needed!