Introducing Video Annotation 3.0

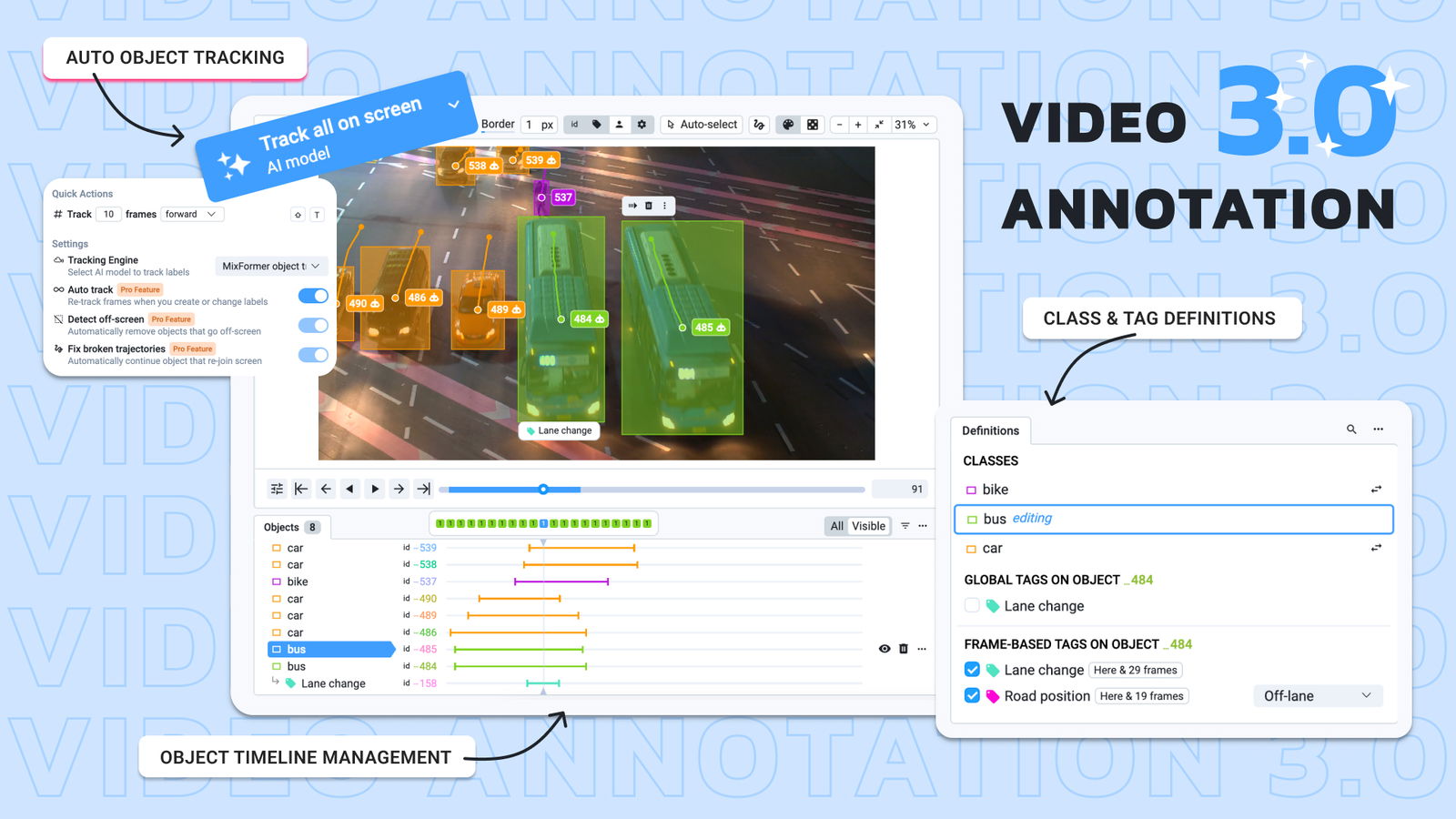

Our largest update so far, with AI-powered auto tracking, enhanced tag management, and a sleek new design for faster, smarter video labeling.

Introduction

Video labeling is a much more complex task than image labeling. It's not just about labeling multiple frames; you also need to consider the relationships between those frames, for example when tracking objects or tagging specific segments. But don't worry, our video annotation toolbox has always been top-tier. Over the past three years, it's been used across a range of industries to tackle these challenges without splitting videos into individual frames.

One of the standout features is our timeline view, which allows for precise control over where labels and tags are placed. We also provide integrated object tracking neural networks that dramatically speed up the labeling process by automating much of the tracking work.

However, after gathering feedback from our users and reflecting on our own experience, we’ve identified areas for improvement. AI technology, especially neural networks, has evolved significantly. It’s no longer just about predicting the next bounding box for an object — AI can do so much more. Plus, while having a single timeline is fantastic, there’s real value in being able to view the bigger picture: seeing all objects and attached tags in one clear view.

After lots of hard work, we’re thrilled to introduce Supervisely Video Annotation Toolbox 3.0 — our biggest update yet. It’s smarter, faster, and designed to be the best tool on the market. 🔥

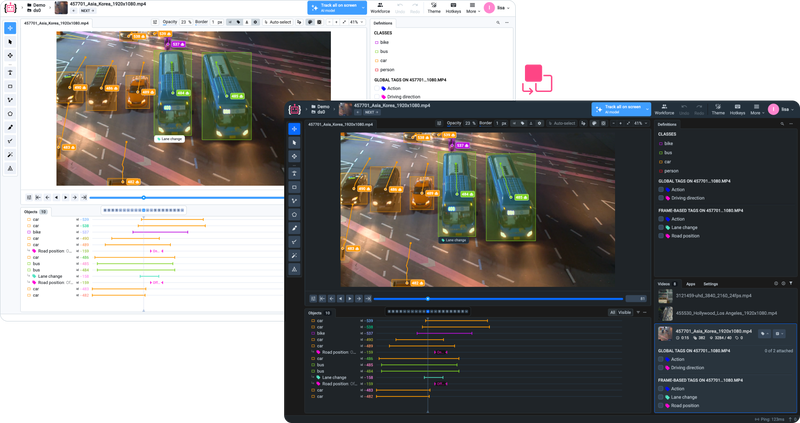

Video Annotation Toolbox 3.0

Auto Tracking

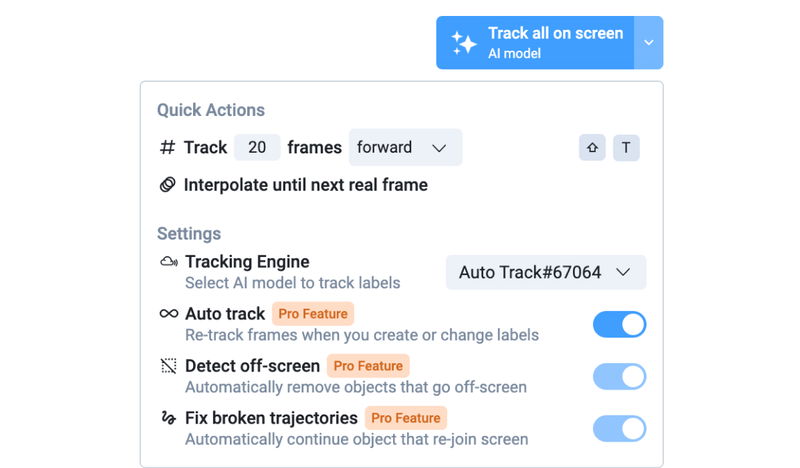

Let’s talk about one of the coolest features of this update: Auto Tracking. This smart functionality is powered by Supervisely Apps that work on top of existing tracking apps from our ecosystem, such as MixFormer. Auto Tracking intelligently selects the right model based on the type of geometry you’re working with, whether it’s a bounding box, a skeleton, a mask, or another shape.

MixFormer object tracking: CVPR 2022 state-of-the-art video object tracking

As you move through your video or modify labels, Auto Tracking predicts labels automatically. But it gets better! The feature is now enhanced with the latest AI developments. For example, it can detect when an object leaves the scene and stop tracking it.
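The announcement does not say how Auto Tracking decides that an object has left the scene. As a rough illustration only, here is one simple geometric heuristic: stop tracking once most of the predicted box lies outside the frame. `predict` is a hypothetical stand-in for a neural tracker such as MixFormer, and `Box`, `visible_fraction`, `track_until_exit`, and the `min_visible` threshold are names invented for this sketch, not Supervisely APIs.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Box:
    left: float
    top: float
    right: float
    bottom: float

    def area(self) -> float:
        # Degenerate or inverted boxes have zero area.
        return max(0.0, self.right - self.left) * max(0.0, self.bottom - self.top)


def visible_fraction(box: Box, width: int, height: int) -> float:
    """Share of the box area that lies inside a width x height frame."""
    if box.area() == 0:
        return 0.0
    clipped = Box(max(box.left, 0), max(box.top, 0),
                  min(box.right, width), min(box.bottom, height))
    return clipped.area() / box.area()


def track_until_exit(start: Box, num_frames: int,
                     predict: Callable[[Box, int], Box],
                     width: int, height: int,
                     min_visible: float = 0.2) -> List[Box]:
    """Run a (hypothetical) tracker frame by frame; stop when the object exits."""
    boxes = [start]
    for frame in range(1, num_frames):
        nxt = predict(boxes[-1], frame)
        if visible_fraction(nxt, width, height) < min_visible:
            break  # object has (mostly) left the frame: stop tracking
        boxes.append(nxt)
    return boxes


# Usage: a fake tracker that moves the box 30 px right per frame in a 100x100 video.
def fake_predict(box: Box, frame: int) -> Box:
    return Box(box.left + 30, box.top, box.right + 30, box.bottom)


boxes = track_until_exit(Box(0, 40, 20, 60), 10, fake_predict, width=100, height=100)
print(len(boxes))  # 4: the next box (x = 120..140) is fully outside, so tracking stops
```

Real trackers typically also rely on a confidence or visibility score; this sketch checks geometry only.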

This AI-powered automation means a massive boost in labeling speed: in our tests, labeling efficiency increased by 500%, and in some cases even more. If you need something a bit simpler, Auto Tracking also supports interpolation, which remains useful in many scenarios.
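Interpolation fills the frames between two manually placed keyframes. A minimal sketch, assuming plain linear interpolation of bounding-box coordinates; the function names and the `(left, top, right, bottom)` tuple layout are illustrative, not Supervisely’s implementation or annotation format.

```python
from typing import Dict, Tuple

BBox = Tuple[float, float, float, float]  # (left, top, right, bottom) in pixels


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation: returns a at t=0 and b at t=1."""
    return a + t * (b - a)


def interpolate_boxes(frame_a: int, box_a: BBox, frame_b: int, box_b: BBox) -> Dict[int, BBox]:
    """Fill every frame strictly between two keyframes with an interpolated box."""
    if frame_b <= frame_a:
        raise ValueError("frame_b must come after frame_a")
    filled: Dict[int, BBox] = {}
    for frame in range(frame_a + 1, frame_b):
        t = (frame - frame_a) / (frame_b - frame_a)  # 0 < t < 1
        filled[frame] = (
            lerp(box_a[0], box_b[0], t),
            lerp(box_a[1], box_b[1], t),
            lerp(box_a[2], box_b[2], t),
            lerp(box_a[3], box_b[3], t),
        )
    return filled


# Usage: keyframes at frames 0 and 4; the box slides 40 px to the right.
boxes = interpolate_boxes(0, (0, 0, 10, 10), 4, (40, 0, 50, 10))
print(boxes[2])  # (20.0, 0.0, 30.0, 10.0)
```

Linear interpolation assumes roughly constant motion between keyframes, so it works best when keyframes are placed where the object changes speed or direction.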

Combined with AI models that can pre-detect objects in a scene, video labeling becomes so easy that you’ll barely need to create labels manually.

You can try this feature on our free community server or contact us for a trial!

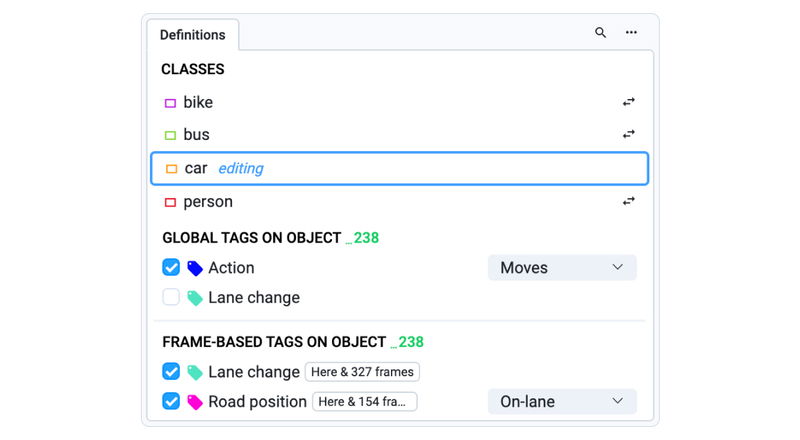

New Definitions Panel

In Video Toolbox 3.0, the Definitions Panel has been revamped for video labeling, building on what we’ve previously introduced for image labeling. It’s even more useful for video projects.

Definitions panel

Just like with image labeling, you can now quickly search for classes and start labeling right away without manually selecting the appropriate tool. All your label classes are displayed clearly on screen for fast access.

For video, we’ve added the ability to assign tags globally or frame by frame. Global tags can be applied to entire videos or specific objects, while frame-based tags let you mark specific frame ranges. You can even configure whether a tag is assigned only to the video, only to objects, or both. Global tags are assigned as usual: to the selected annotation object or, if none is selected, to the current video.

In previous versions, this was done by clicking the "clip" icon near the tag.

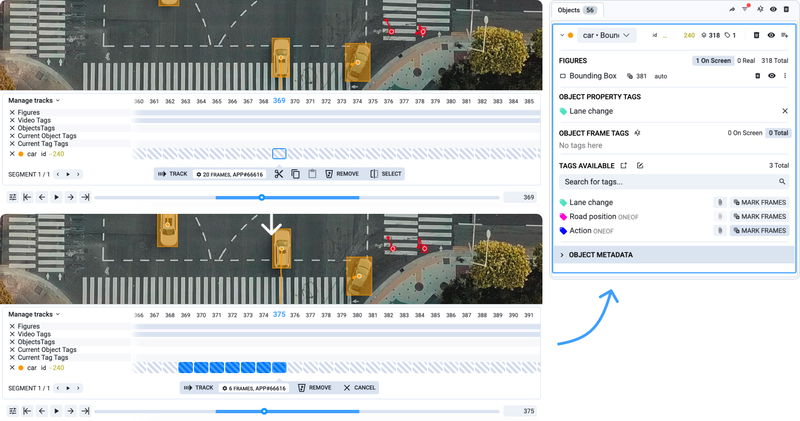

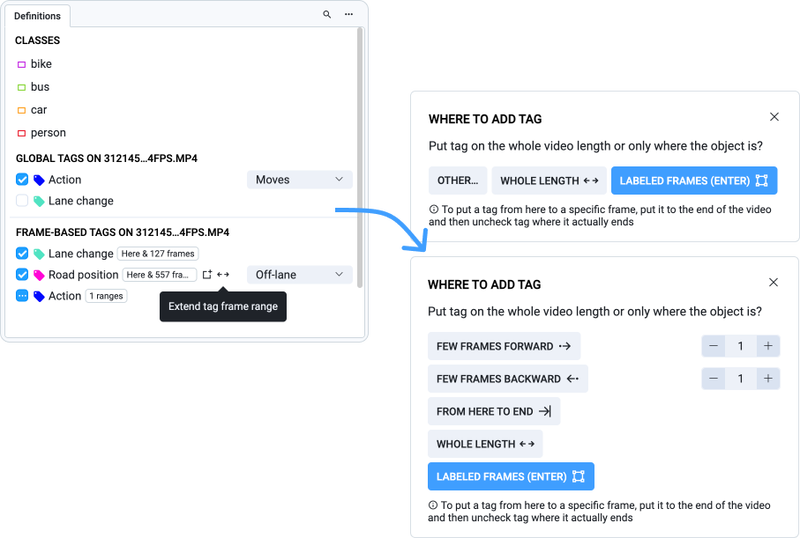

Tagging Frames Made Easy

Frame-based tagging used to require quite a few steps. You had to click the "mark frames" button, select a tag, and manually mark the range on a zoomed timeline. Assigning the same tag across multiple segments would create multiple instances, which added complexity when you wanted to edit or review them:

Tagging in Video Annotation 2.0 (previous version)

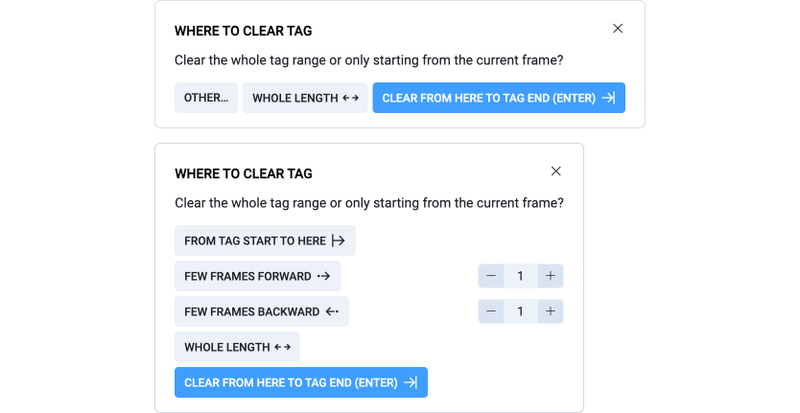

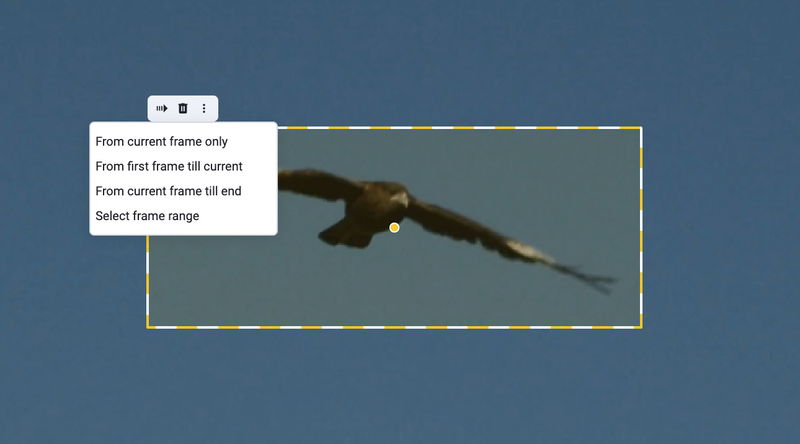

In Video Toolbox 3.0, tagging frames is far more intuitive. Simply check the box for a tag on the current frame, and a modal window will ask where you want to place it. By default, the tag is applied from the current frame to the end of the video, which covers most cases.

Click checkbox to start tag range

This creates a new segment of the tag assigned to the selected annotation object or the current video. Other options include assigning it from frame 0 to the video end or specifying an exact length.

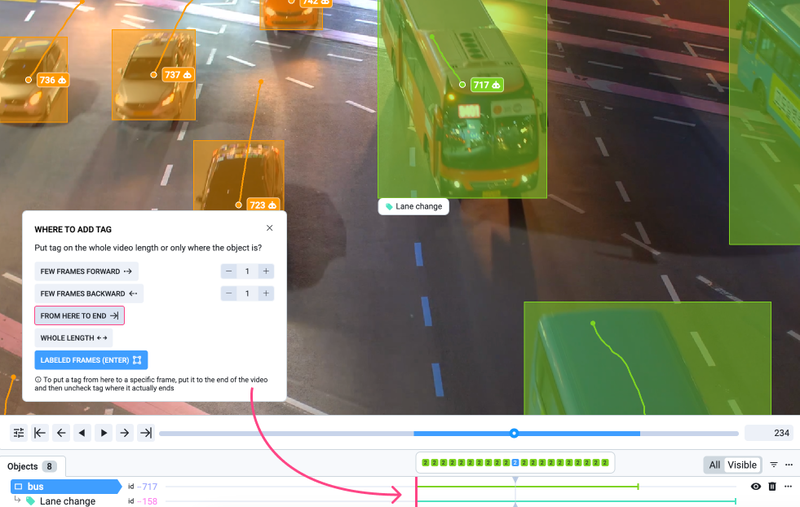

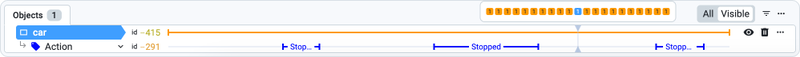

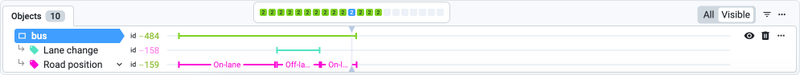

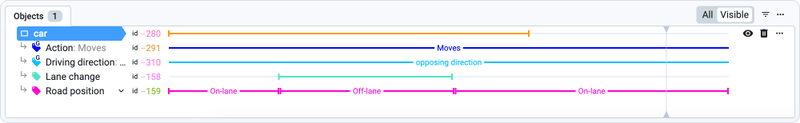

The timeline shows at a glance where tag ranges are

Need to stop the tag at a certain frame? Just uncheck the box, and you’ll be prompted with an option to clear it from that point forward.

This new method simplifies the process — just watch the video, check when the tag starts, and uncheck when it ends. No more fiddling with zoomed timelines or selecting specific modes. And if a tag comes and goes throughout the video, you can easily add and remove it as needed.

Modifying the tag value works similarly — just hover over the tag value input or double-click the segment in the Objects & Tags panel to adjust it. By default, we’ll split the tag into a new segment, making changes quick and straightforward.
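The check, uncheck, and edit workflow maps naturally onto a sorted list of non-overlapping frame ranges. The sketch below is a hypothetical model, not Supervisely’s data format: `TagTimeline`, `Segment`, and the method names are invented for illustration. Two behaviors are assumptions: a new segment stops just before the next existing segment so ranges never overlap, and unchecking trims only the segment that covers the current frame.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Segment:
    start: int                   # first tagged frame (inclusive)
    end: int                     # last tagged frame (inclusive)
    value: Optional[str] = None  # tag value, if the tag has one


class TagTimeline:
    """Hypothetical model of one tag's frame ranges on an object or video."""

    def __init__(self, last_frame: int) -> None:
        self.last_frame = last_frame
        self.segments: List[Segment] = []

    def _covering(self, frame: int) -> Optional[Segment]:
        # Segments never overlap, so at most one can contain `frame`.
        for seg in self.segments:
            if seg.start <= frame <= seg.end:
                return seg
        return None

    def _add(self, seg: Segment) -> None:
        self.segments.append(seg)
        self.segments.sort(key=lambda s: s.start)

    def check(self, frame: int, value: Optional[str] = None) -> None:
        """Start the tag at `frame`; run to the video end or the next segment."""
        if self._covering(frame) is not None:
            return  # frame is already tagged
        later_starts = [s.start for s in self.segments if s.start > frame]
        end = min(later_starts) - 1 if later_starts else self.last_frame
        self._add(Segment(frame, end, value))

    def uncheck(self, frame: int) -> None:
        """Clear the tag from `frame` onward within the segment covering it."""
        seg = self._covering(frame)
        if seg is None:
            return
        if frame == seg.start:
            self.segments = [s for s in self.segments if s is not seg]
        else:
            seg.end = frame - 1

    def set_value(self, frame: int, value: str) -> None:
        """Change the value from `frame` onward by splitting the covering segment."""
        seg = self._covering(frame)
        if seg is None:
            return
        if frame == seg.start:
            seg.value = value  # nothing to split off
            return
        tail = Segment(frame, seg.end, value)
        seg.end = frame - 1
        self._add(tail)


# Usage: watch the video, check when the tag starts, uncheck when it ends.
timeline = TagTimeline(last_frame=99)
timeline.check(10, "walking")      # 10..99
timeline.uncheck(30)               # 10..29
timeline.check(50, "walking")      # 50..99
timeline.set_value(70, "running")  # 50..69 walking, 70..99 running
print([(s.start, s.end, s.value) for s in timeline.segments])
# [(10, 29, 'walking'), (50, 69, 'walking'), (70, 99, 'running')]
```

Keeping segments sorted and non-overlapping means every frame maps to at most one tag value, which keeps lookups and edits simple.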

You can even attach the same tag to multiple frames if needed, which is handy for scenarios like consensus labeling. We’ve also added an "extend" button for quick adjustments to tag ranges without needing to rewind through the video.

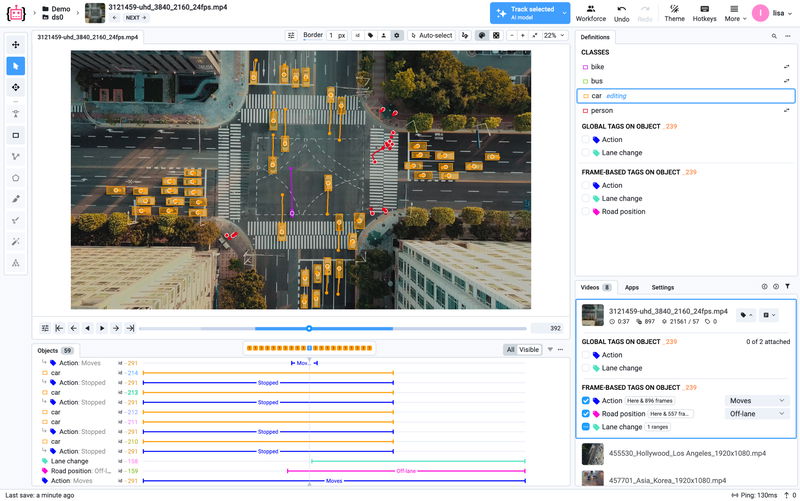

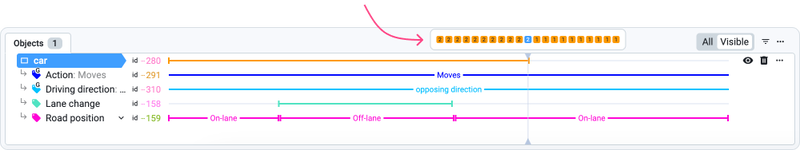

New Timelines with Objects & Tags

Another huge improvement in Video Toolbox 3.0 is the new Objects & Tags panel. Instead of relying on a single, zoomed-in timeline (which only shows about 50 frames at a time), this panel gives you a clear overview of all objects and tags throughout the entire video. You can easily jump to specific segments, adjust tag values, or use various actions from the context menu.

We’ve kept the floating zoomed timeline as well, but it’s now much smaller and designed to help you focus on precise edits while the Objects & Tags panel gives you the full picture. To navigate, click the frame you’re interested in or use your mouse scroll wheel.

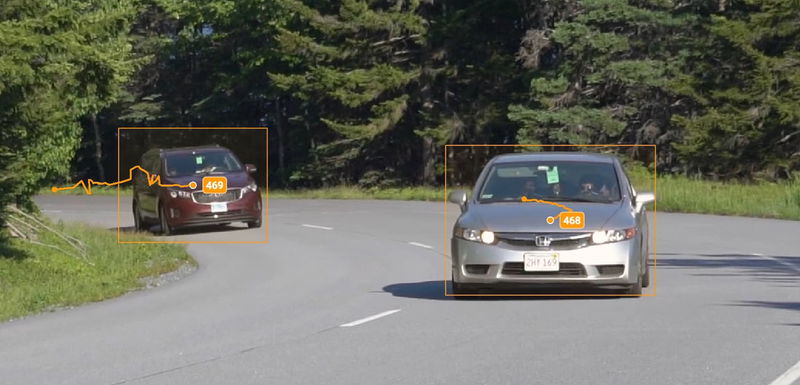

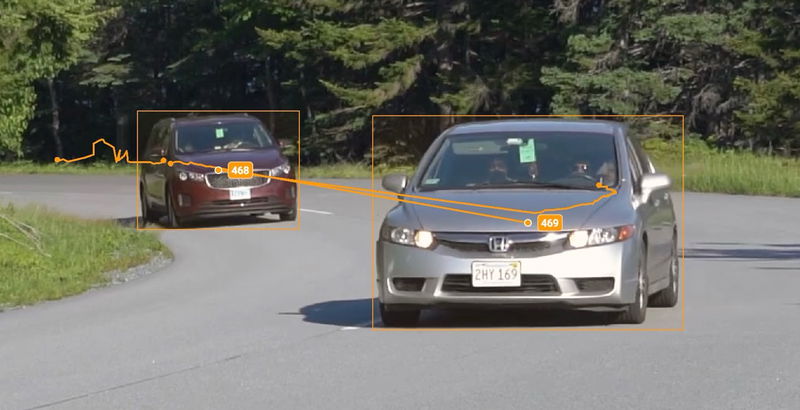

Trajectories

When tracking objects, understanding their movement from frame to frame is crucial. Labelers or AI models can sometimes confuse objects and assign bounding boxes to the wrong one. To catch these errors, enable the new Trajectories view. It visualizes object movement and is especially useful with static cameras: when a trajectory suddenly jumps from one object to another, the mistake is easy to spot.
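With a static camera, a swapped identity usually shows up as a sudden, large displacement of the box center between consecutive frames. A minimal sketch of flagging such jumps automatically, assuming boxes stored as `(left, top, right, bottom)` tuples keyed by frame index; `find_jumps` and the `max_step` threshold (pixels per frame) are hypothetical and would need tuning for each video.

```python
import math
from typing import Dict, List, Tuple

BBox = Tuple[float, float, float, float]  # (left, top, right, bottom) in pixels


def center(box: BBox) -> Tuple[float, float]:
    left, top, right, bottom = box
    return (left + right) / 2, (top + bottom) / 2


def find_jumps(track: Dict[int, BBox], max_step: float) -> List[int]:
    """Frames where the box center moves faster than `max_step` px per frame."""
    frames = sorted(track)
    jumps: List[int] = []
    for prev, cur in zip(frames, frames[1:]):
        (x0, y0), (x1, y1) = center(track[prev]), center(track[cur])
        speed = math.hypot(x1 - x0, y1 - y0) / (cur - prev)  # divide by gap length
        if speed > max_step:
            jumps.append(cur)
    return jumps


# Usage: the box moves 2 px per frame, then snaps to a different object at frame 3.
track = {
    0: (0, 0, 10, 10),
    1: (2, 0, 12, 10),
    2: (4, 0, 14, 10),
    3: (200, 150, 210, 160),
    4: (202, 150, 212, 160),
}
print(find_jumps(track, max_step=20.0))  # [3]
```

A fixed pixel threshold only makes sense when the camera does not move; with a moving camera, the background motion would have to be compensated first.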

Normal trajectories

Detection mistake

Quick Actions

When you have hundreds of objects to label across thousands of frames, every mouse movement matters; even moving the cursor across the screen takes valuable time. To speed up your workflow, Supervisely Video Annotation 3.0 adds a floating quick actions panel that appears right next to the annotation object. With minimal effort, you can move a bounding box to another object, delete it from the current frame onward, and much more.

Quick actions panel

White Theme

We’re also introducing a brand new white theme in this update! Whether you prefer a light interface or the original dark mode, you can now switch between them by clicking the "Theme" button in the top menu. The white theme provides a cleaner look and is perfect for users who prefer a lighter workspace for extended work sessions.

Dark and light themes

Conclusion

We’re super excited to bring you Supervisely Video Annotation Toolbox 3.0! With all the improvements we’ve added, from AI-powered auto tracking and more intuitive tag management to the revamped Definitions panel and the sleek new white theme, labeling videos with precision is easier and faster than ever. We’ve listened to your feedback and made sure this version is packed with features that make a real difference in your workflow.

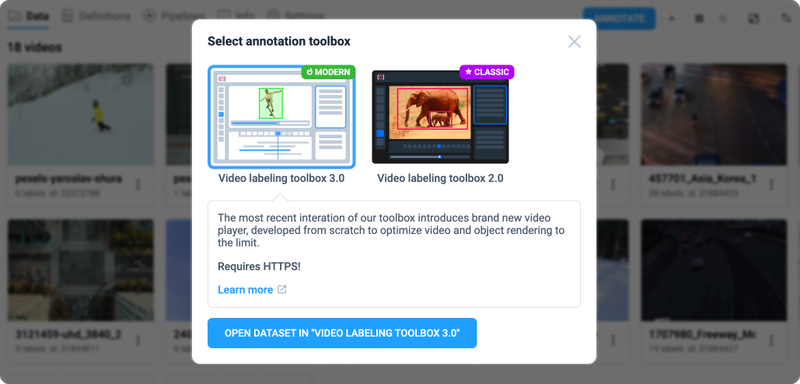

But don’t worry if you’re attached to the previous version – you can still switch back to it anytime from the version selection modal.

Select new or previous versions

How to Try It?

Excited to explore the features of the all-new Video Annotation 3.0? It's already available on our free community server, complete with pre-deployed AI Auto Tracking!

Want to test your own AI models or have questions? Reach out to us for a demo call!

Supervisely for Computer Vision

Supervisely is an online and on-premise platform that helps researchers and companies build computer vision solutions. We cover the entire development pipeline, from labeling images, videos, and 3D data to model training.

The big difference from other products is that Supervisely is built like an OS with countless Supervisely Apps: interactive web tools that run in your browser yet are powered by Python. This makes it possible to integrate all those awesome open-source machine learning tools and neural networks, enhance them with a user interface, and let everyone run them with a single click.

You can order a demo or try it yourself for free on our Community Edition — no credit card needed!